The Fourth Annual Kolmogorov Lecture

|

|

3rd February 2006

Royal Holloway, University of London

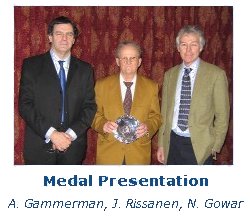

This annual University of London lecture celebrates the life and work of Andrei Nikolaevich Kolmogorov, one of the greatest mathematical and scientific minds of the last century. The lecture addresses current issues arising from the impact of Kolmogorov’s work in the fields of mathematical and computer science. Each Kolmogorov Lecture is given by one of the leading figures in their field, who is presented with a medal in recognition of their own contribution to science. The 2006 event was a great success drawing visitors from Europe and the United States.

The lecture at the Fourth Annual Kolmogorov Lecture was given by Professor

Jorma Rissanen, Professor Emeritus of Tampere University and was entitled

"The Structure Function and Distinguishable Models of Data."

In what has become a tradition, the lecture was immediately followed

by a wine reception in Royal Holloway's celebrated Picture Gallery.

Inspired by Kolmogorov's structure function for

finite sets as models of data in the algorithmic theory of information

we adapt the construct to probability models in a family of distributions

to avoid the noncomputability problem. The picture of modeling looks

then as follows: The models in the family have a double index, where

the first specifes a structure, ranging over a finite or a countable

set, and the second consists of parameter values, ranging over a

continuum. An optimal structure index can be determined by the MDL

(Minimum Description Length) principle in a two-part code form,

where the sum of the code lengths for the structure and the data

is minimized. The latter is obtained from the universal NML (Normalized

Maximum Likelihood) model for the subfamily of models having the

specified structure. This amounts to maximization of the posterior

if the universal model is taken as the Bayesian mixture.

The determination of the optimal model in the optimized structure is more difficult. It requires a partition of the parameter space into equivalence classes, each associated with a model, in such a way that the Kullback-Leibler distance between any two adjacent models is equal and that the models are optimally distinguishable from the given amount of data. This notion of distinguishability is a modification of a related idea of Balasubramanian. The particular model, specified by the observed data, is the simplest one that incorporates all the properties in the data that can be extracted with the model class considered. As an application we get a different treatment of hypothesis testing, in which the two sources of errors in the decision, errors due to sampling and estimation, are optimally balanced.

|

|

|

|

For further photos of the 2006 lecture, please click here.

Sponsors:

|

|

Tel/Fax : +44 (0)1784 443421 /439786